by Jim X. Chen, Daniel B. Carr, Xusheng Wang

George Mason University

Fairfax, VA 22030-4444

B. Sue Bell and Linda W. Pickle

National Cancer Institute

Bethesda, MD 20892-8317

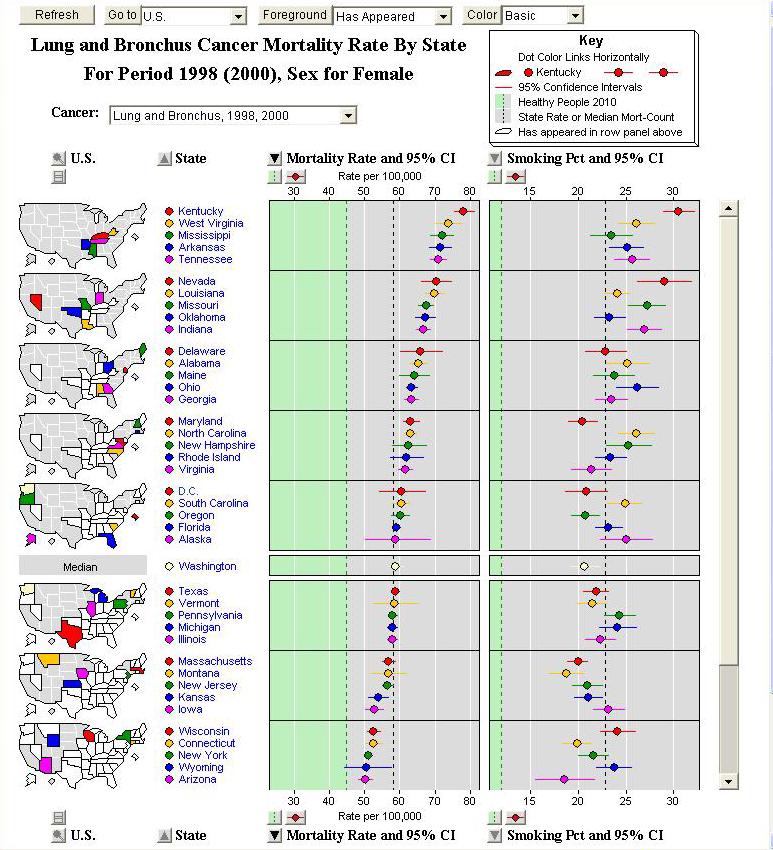

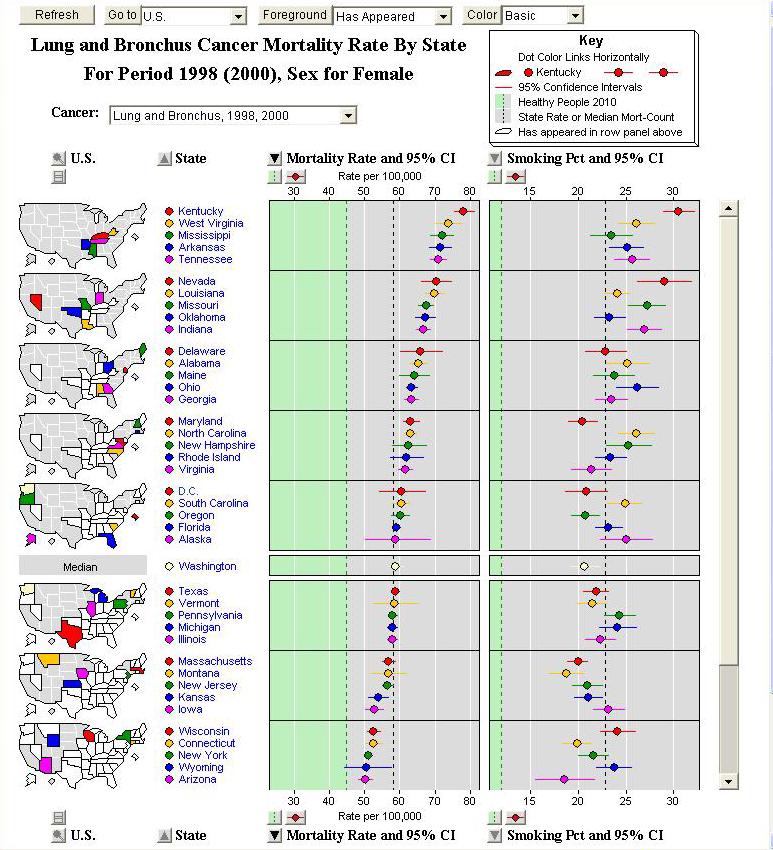

Linked Micromap plots (LM plots) present a template for displaying spatially indexed statistical summaries. This template has four key features [1]. First, LM plots include at least three parallel sequences of panels (micromap, label, and statistical summary) that are linked by position. Second, the study units are sorted. Third, the study units are partitioned into panels so that attention focuses on a few units at a time. Fourth, the highlighted study units are linked across corresponding panels of the sequences.
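The sorting, partitioning, and linking features can be illustrated with a small layout helper. This is a minimal sketch, not the NCI implementation: the function name `lm_layout`, the default group size of five, and the `COLORS` list are assumptions made for illustration. Each inner list stands for one row of parallel panels (micromap, label, statistical summary), and the color assigned to a unit is what links it across those panels.

```python
from typing import Dict, List, Tuple

# Hypothetical linking colors: one per position within a group
# (assumption: at most five units are shown per group).
COLORS = ["red", "orange", "green", "blue", "purple"]


def lm_layout(values: Dict[str, float],
              group_size: int = 5) -> List[List[Tuple[str, float, str]]]:
    """Sort units by value, split them into small groups, and give each unit
    a color that links its micromap, label, and statistics panels."""
    if group_size > len(COLORS):
        raise ValueError("need one linking color per unit in a group")
    # Second feature: sort the study units (descending by the statistic).
    ranked = sorted(values.items(), key=lambda kv: kv[1], reverse=True)
    groups = []
    # Third feature: partition into panels that each hold only a few units.
    for start in range(0, len(ranked), group_size):
        chunk = ranked[start:start + group_size]
        # Fourth feature: the same color marks a unit in every parallel panel
        # of its row, linking it by position and color.
        groups.append([(name, val, COLORS[i])
                       for i, (name, val) in enumerate(chunk)])
    return groups


if __name__ == "__main__":
    # Toy values for unnamed units A-G, for illustration only.
    toy = {"A": 3.2, "B": 7.5, "C": 1.1, "D": 5.0, "E": 4.4, "F": 6.8, "G": 2.0}
    for row, group in enumerate(lm_layout(toy, group_size=3)):
        print(row, group)
```

Reusing the same small color sequence in every group keeps the number of colors a reader must track low, which is the point of partitioning the units in the first place.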

LM plots are a new visualization methodology that is useful for data- and knowledge-based pattern representation and for the knowledge discovery process. They can be used to visualize complex data in many areas [1, 2]. Interactive LM plots let readers clearly view and compare relationships among the study units.

Using LM plots to display federal cancer statistical summaries is an effective application. In this article, we describe a web-based LM plots application developed for the National Cancer Institute (NCI) to visualize cancer statistical summaries for the United States and all the states on the Internet. These web-based LM plots not only present all the key features of LM plots but also offer higher interactivity. Moreover, the magnified micromap and the overall look of the statistical summaries make … Internet. Since the web is a public information inventory, using LM plots on the web will let more readers share this effective visualization methodology and bring it into a more practical environment.

by Jim X. Chen, Yanling Liu, and Lin Yang

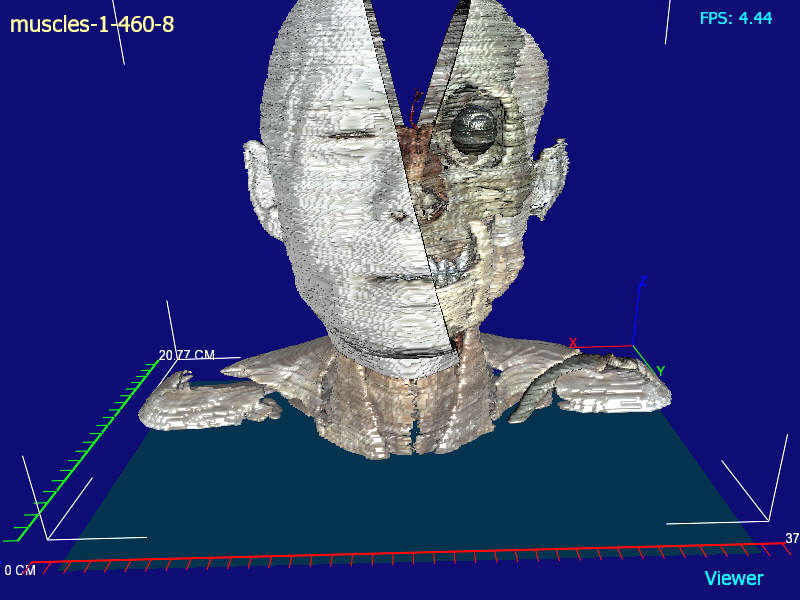

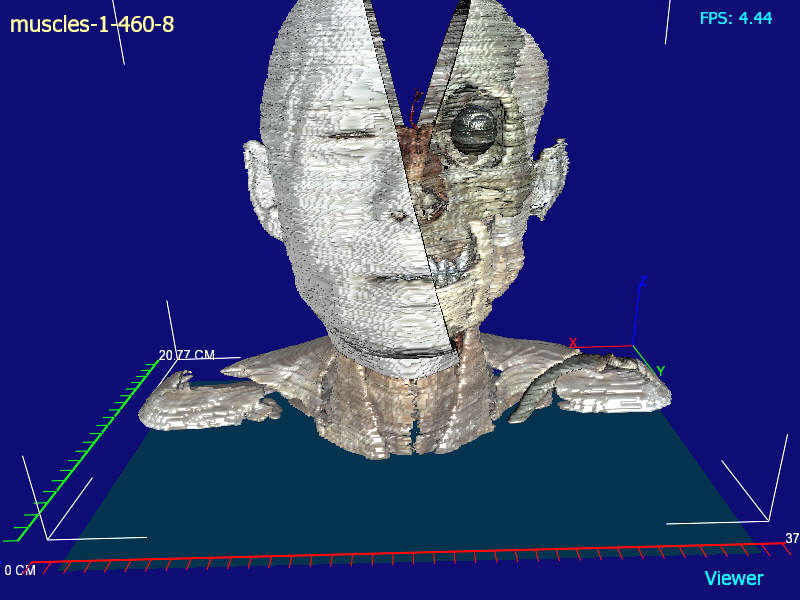

To learn human anatomy, medical students must practice on cadavers, as must physicians who want to brush up on their anatomy knowledge. However, cadavers are in short supply in medical schools worldwide. Virtual anatomy and surgery can potentially solve this problem. We present VHASS (Virtual Human Anatomy and Surgery System), a system based on reconstructing the human body from cryosection images. By constructing 3D models that include details of human organs, we can give medical students and physicians unlimited access to realistic virtual cadavers.

Currently, many systems can reconstruct the human body from magnetic resonance imaging (MRI) or computerized tomography (CT) data. However, these systems share a common drawback: they cannot group human body components or display human tissues in their natural colors. Consequently, technicians have difficulty identifying body components clearly and must assign artificial colors when generating 3D models from these images. Such models might look good, but they are unrealistic and hinder anatomy and surgery simulation and training. VHASS provides a better platform for virtual anatomy because it dissects all human organs according to their anatomic structures, separates human tissues within cryosection images, reconstructs a 3D mesh surface for each part, and finally renders each part as a high-quality 3D model, so that all parts and tissues appear in their natural colors.
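One ingredient of such a pipeline, separating a tissue from a cryosection slice by color and keeping its natural color for rendering, can be sketched as follows. This is an illustrative assumption, not the VHASS algorithm: it uses a plain Euclidean RGB distance threshold, and the function names `segment_by_color` and `natural_color`, the tolerance, and the tiny synthetic slice are hypothetical.

```python
import math
from typing import List, Tuple

RGB = Tuple[int, int, int]


def segment_by_color(slice_rgb: List[List[RGB]], ref: RGB,
                     tol: float) -> List[List[bool]]:
    """Mark pixels whose RGB distance to a reference tissue color is <= tol.
    (Assumed segmentation rule, chosen for illustration only.)"""
    return [[math.dist(px, ref) <= tol for px in row] for row in slice_rgb]


def natural_color(slice_rgb: List[List[RGB]], mask: List[List[bool]]) -> RGB:
    """Average the original pixel colors under the mask, so the part can be
    rendered in the tissue's own color instead of an artificial one."""
    picked = [px for row, mrow in zip(slice_rgb, mask)
              for px, m in zip(row, mrow) if m]
    if not picked:
        raise ValueError("mask selects no pixels")
    n = len(picked)
    r, g, b = (round(sum(p[c] for p in picked) / n) for c in range(3))
    return (r, g, b)


if __name__ == "__main__":
    # Synthetic 2x3 "slice": reddish tissue, pale tissue, and dark background.
    slice_ = [[(180, 60, 50), (175, 65, 55), (240, 230, 200)],
              [(185, 55, 45), (235, 225, 205), (30, 30, 30)]]
    mask = segment_by_color(slice_, ref=(180, 60, 50), tol=20)
    print(mask)
    print(natural_color(slice_, mask))
```

Stacking such per-slice masks gives a binary volume from which a surface mesh can be extracted (marching cubes is one common choice), and the averaged color can then be used when rendering that mesh.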

Reference:

L. Yang, J.X. Chen, and Y. Liu, “Virtual Human Anatomy,” IEEE Computing in Science and Engineering, vol. 7, no. 5, Sept. 2005, pp. 71-73.

by Jim X. Chen, Jingfang Wang, Xiaodong Fu, and Edward J. Wegman

(Funded by the Office of the Provost at GMU; PI: Jim X. Chen)

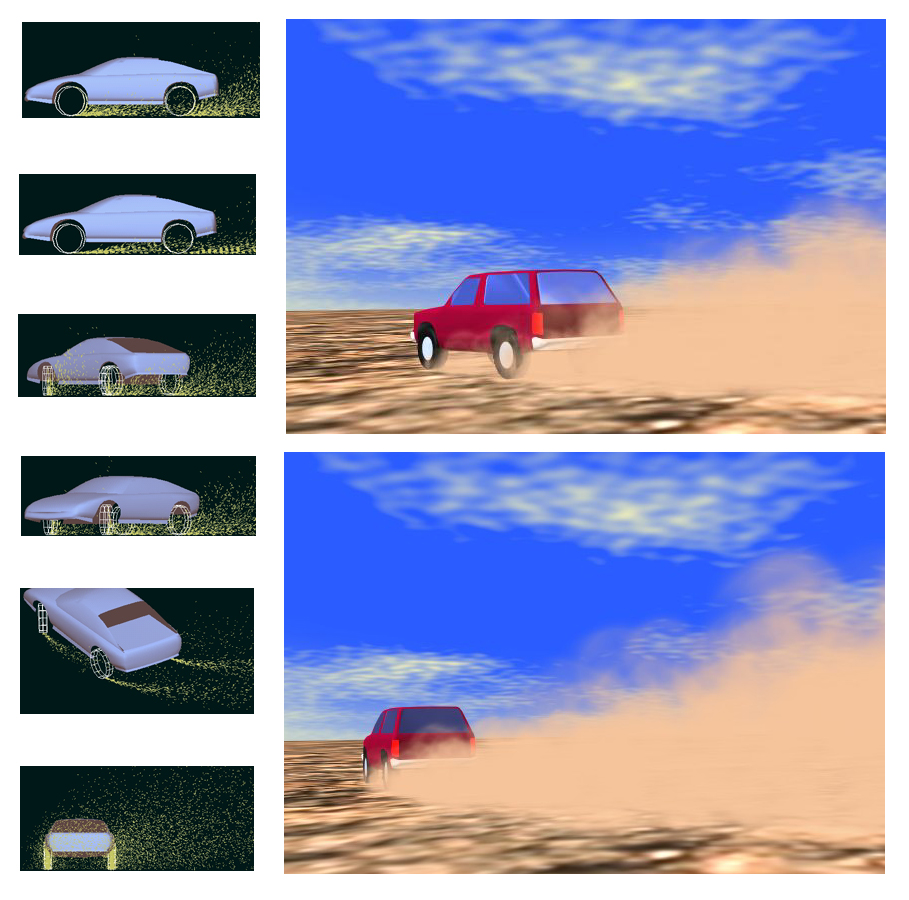

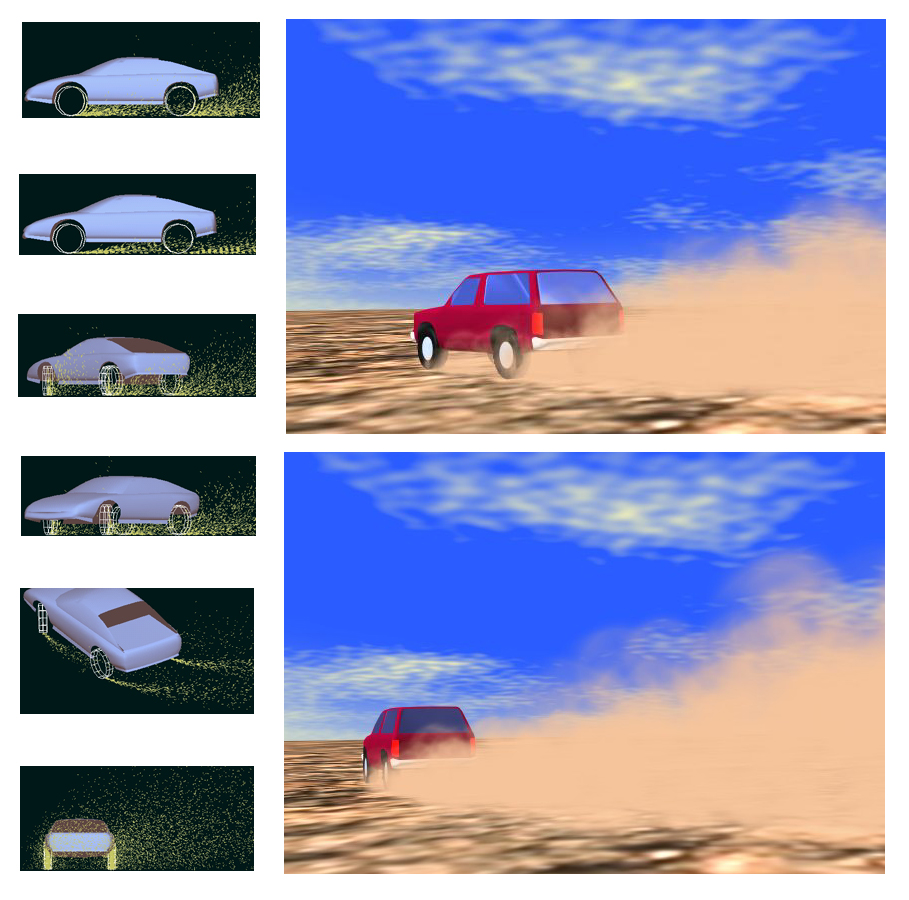

Simulating physically realistic, complex dust behavior is very useful in training, education, art, advertising, and entertainment. There are no published models for real-time simulation of the dust generated by a traveling vehicle. We use particle systems, computational fluid dynamics, and behavioral simulation techniques to simulate dust behavior in real time. First, we analyze the forces and factors that affect dust generation and the behavior of dust particles after they are generated. Then, we construct physically based empirical models to generate dust particles and control their behavior accordingly. We further simplify the numerical calculations by dividing dust behavior into three stages and establishing a simplified particle system model for each stage. We employ motion blur, particle blending, texture mapping, and other computer graphics techniques to achieve the final results. Our contributions include constructing physically based empirical models of dust behavior and simulating that behavior in real time.
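A generic particle-system update in the spirit of this staged approach is sketched below. It is not the published model: the three stages are represented only by hypothetical age thresholds (`STAGE_AGES`), and the forces (gravity plus linear drag toward a wind velocity), the lifetime, and all constants are assumptions chosen for illustration. Rendering steps such as motion blur and blending are omitted.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float, float]

GRAVITY = -9.8           # m/s^2 along z (assumed)
DRAG = 2.0               # 1/s, linear relaxation toward the wind (assumed)
STAGE_AGES = (0.5, 3.0)  # hypothetical stage boundaries, seconds
LIFETIME = 6.0           # hypothetical particle lifetime, seconds


@dataclass
class Particle:
    pos: Vec
    vel: Vec
    age: float = 0.0

    def stage(self) -> int:
        """0 = just ejected, 1 = airborne, 2 = drifting (hypothetical split)."""
        if self.age < STAGE_AGES[0]:
            return 0
        return 1 if self.age < STAGE_AGES[1] else 2


def step(particles: List[Particle], wind: Vec, dt: float) -> List[Particle]:
    """Advance particles in place by one semi-implicit Euler step (velocity
    first, then position) and return only those still alive."""
    alive = []
    for p in particles:
        s = p.stage()
        vx, vy, vz = p.vel
        if s == 0:
            # Stage 0: ballistic ejection, gravity only.
            vz += GRAVITY * dt
        elif s == 1:
            # Stage 1: gravity plus linear drag pulling velocity toward the wind.
            vx += DRAG * (wind[0] - vx) * dt
            vy += DRAG * (wind[1] - vy) * dt
            vz += (GRAVITY + DRAG * (wind[2] - vz)) * dt
        else:
            # Stage 2: simplified model, the particle just drifts with the wind.
            vx, vy, vz = wind
        x, y, z = p.pos
        # Clamp the height so particles never go below the ground plane z = 0.
        p.pos = (x + vx * dt, y + vy * dt, max(0.0, z + vz * dt))
        p.vel = (vx, vy, vz)
        p.age += dt
        if p.age < LIFETIME:
            alive.append(p)
    return alive


if __name__ == "__main__":
    cloud = [Particle(pos=(0.0, 0.0, 0.1), vel=(1.0, 0.0, 2.0))]
    for _ in range(10):
        cloud = step(cloud, wind=(0.5, 0.0, 0.0), dt=0.1)
    print(cloud)
```

Switching to a cheaper update rule once a particle reaches a later stage is one way to trade accuracy for speed, which is the motivation the abstract gives for the staged models.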

Reference:

J.X. Chen, X. Fu, and E.J. Wegman, “Real-Time Simulation of Dust Behaviors Generated by a Fast Traveling Vehicle,” ACM Transactions on Modeling and Computer Simulation, vol. 9, no. 2, Apr. 1999, pp. 81-104.

by Jim X. Chen and Ying Zhu

(Funded by Knee Alignment of Greater Washington; PI: Jim X. Chen)

We use computer graphics, physics-based modeling, and interactive visualization to assist in studying and performing knee surgery. Current static examinations of the knee, such as X-rays and magnetic resonance imaging (MRI), are replaced by interactive visualization, surgery study and planning, exercise, and outcome prediction. First, a set of MRI slices of the knee, the patient's height and weight, and other input data are collected from the patient. Then, a 3D knee surface model is constructed from the MRI slices and a standard reference knee model. After that, animations of the gait cycle are generated, with interactive visualization of the pressure distributions at the knee joint, which are calculated according to the current posture. At the same time, a virtual surgery can be performed and data about the surgical process recorded, and the simulation and visualization can demonstrate the gait cycle and the modified pressure distributions immediately after the surgery.

References:

J.X. Chen, H. Wechsler, J.M. Pullen, Y. Zhu, and E.B. MacMahon, “Knee Surgery Assistance: Patient Model Construction, Motion Simulation, and Biomechanical Visualization,” IEEE Transactions on Biomedical Engineering, vol. 48, no. 9, Sept. 2001, pp. 1042-1052.

Y. Zhu, J.X. Chen, S. Xiao, and E.B. MacMahon, “3D Knee Modeling and Biomechanical Simulation,” IEEE Computing in Science and Engineering, vol. 1, no. 4, July/Aug. 1999, pp. 82-87.

by Jim X. Chen

(Funded by US Army STRICOM)

We present a new method for real-time fluid simulation in computer graphics and dynamic virtual environments. We solve the 2D Navier-Stokes equations with a computational fluid dynamics method and then map the surface into 3D using the corresponding pressures in the flow field. This achieves realistic real-time fluid surface behavior by applying the physical laws governing fluids while avoiding extensive 3D fluid dynamics computations. To complement the surface behavior, we calculate fluid volume and external boundary changes separately to achieve full 3D general fluid flow. Unlike previous computer graphics fluid models, our model allows multiple fluid sources to be placed interactively at arbitrary locations in a dynamic virtual environment. The fluid flows from these sources at user-modifiable flow rates, following a terrain that can be modified dynamically, for example, by a bulldozer. Our approach can simulate many different fluid behaviors by changing the internal or external boundary conditions. It can model different kinds of fluids by varying the Reynolds number, simulate objects moving or floating in fluids, and produce synchronized general fluid flow in a distributed interactive simulation.
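Two ideas from this abstract, turning a 2D pressure field into surface heights and characterizing a fluid by its Reynolds number, can be illustrated with small helpers. Both are assumptions for illustration: the hydrostatic relation h = p / (ρg) stands in for the pressure-to-height mapping (the published mapping may differ), the names `pressure_to_heights` and `reynolds_number` are hypothetical, and no Navier-Stokes solver is included.

```python
from typing import List

G = 9.81  # gravitational acceleration, m/s^2


def pressure_to_heights(pressure: List[List[float]],
                        density: float) -> List[List[float]]:
    """Map a 2D grid of pressures (Pa) to surface heights (m), one per cell,
    using the hydrostatic relation h = p / (rho * g) (assumed mapping)."""
    return [[p / (density * G) for p in row] for row in pressure]


def reynolds_number(density: float, speed: float, length: float,
                    viscosity: float) -> float:
    """Re = rho * U * L / mu, with mu the dynamic viscosity (Pa*s)."""
    return density * speed * length / viscosity


if __name__ == "__main__":
    heights = pressure_to_heights([[0.0, 981.0], [1962.0, 490.5]], density=1000.0)
    print(heights)
    # Water near 20 C: rho ~ 998 kg/m^3, mu ~ 1.0e-3 Pa*s; U = 1 m/s, L = 1 m.
    print(reynolds_number(998.0, 1.0, 1.0, 1.0e-3))
    # A hypothetical fluid 1000 times more viscous, same flow scale.
    print(reynolds_number(998.0, 1.0, 1.0, 1.0))
```

In the nondimensional Navier-Stokes equations the viscous term is scaled by 1/Re, so lowering the Reynolds number makes the simulated fluid behave more viscously, which is why varying it lets one model different kinds of fluids.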

References:

J.X. Chen, N.V. Lobo, C.E. Hughes, and J.M. Moshell, “Real-time Fluid Simulation in a Networked Virtual Environment,” IEEE Computer Graphics and Applications, vol. 17, no. 3, 1997, pp. 52-61.

J.X. Chen and N.V. Lobo, “Toward Interactive-Rate Simulation of Fluids with Moving Obstacles Using Navier-Stokes Equations,” CVGIP: Graphical Models and Image Processing, vol. 57, no. 2, 1995, pp. 107-116.

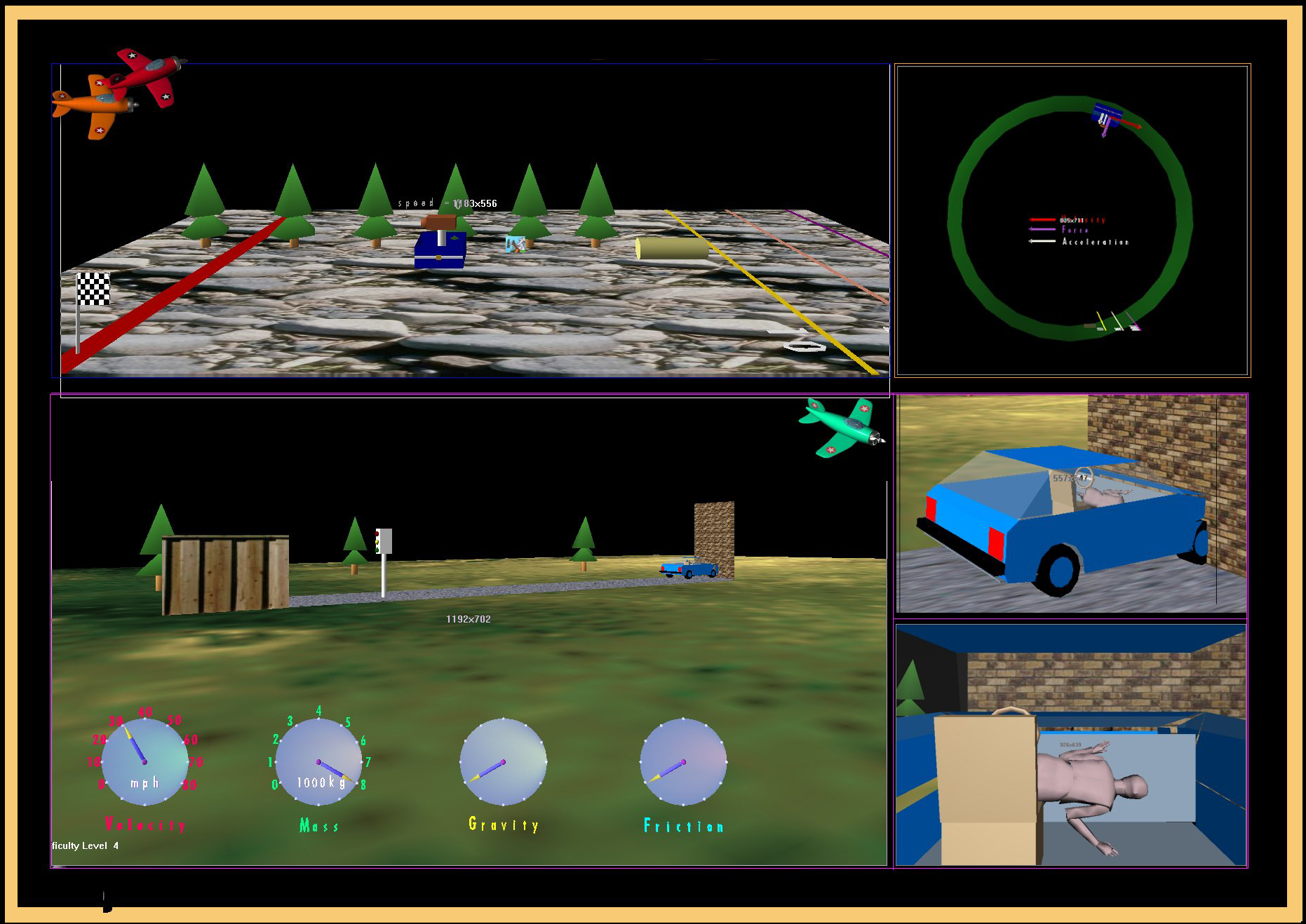

DEVISE: Designing Environments for Virtual Immersive Science Education

by Mike Behrmann, Jim X. Chen, Chris Dede, Debra Sprague, Xusheng Wang, and Shuangbao Wang

(Funded by US Department of Education; PI: Mike Behrmann; Co-PIs: Jim X. Chen, Chris Dede)

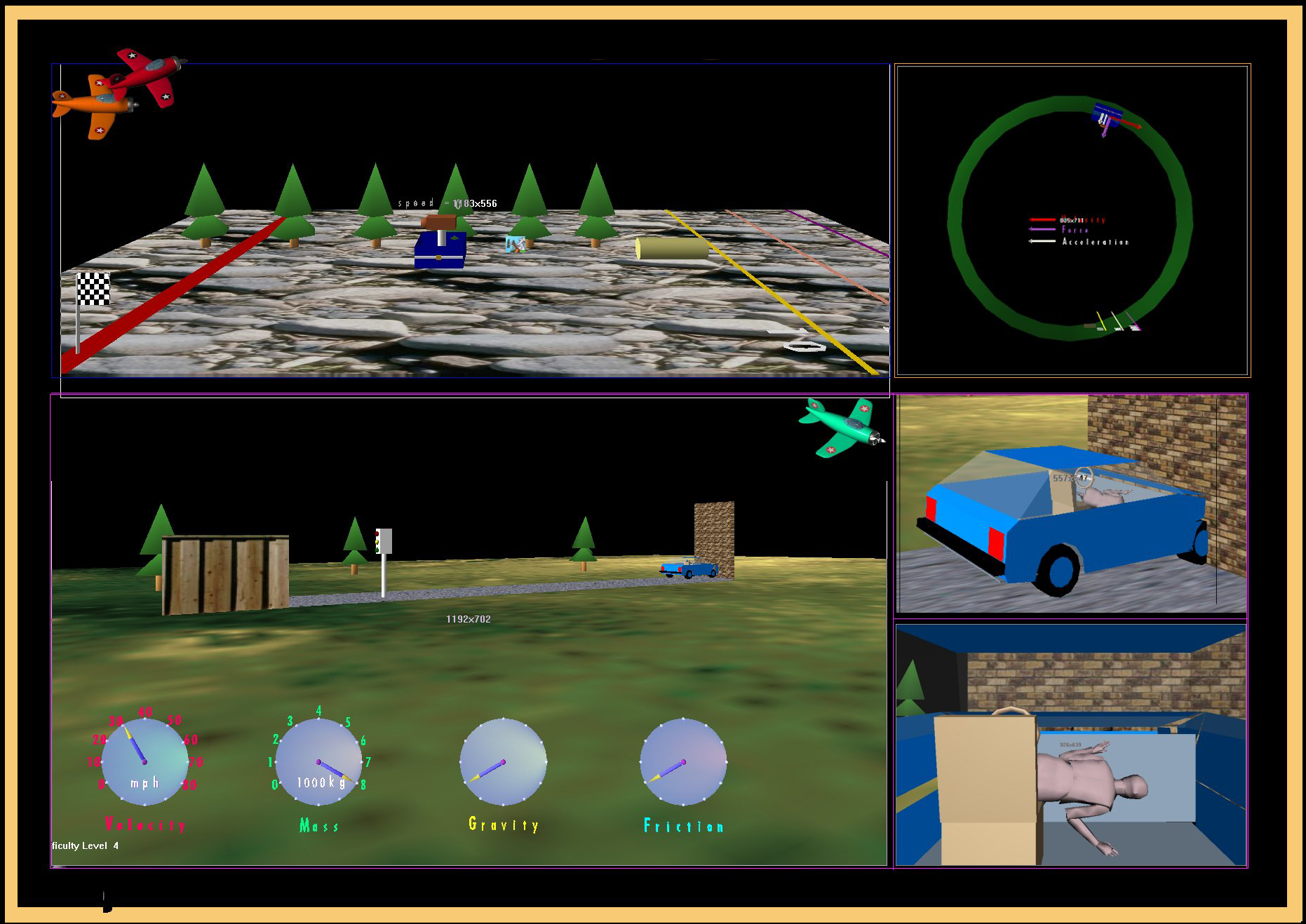

Students with learning disabilities continue to fall behind regular education students as they move into more cognitively demanding areas, such as science and math instruction. Immersive virtual environments can increase these students' access to the regular physics curriculum by providing 3D abstractions of concepts that cannot be represented in other delivery formats. Creating new, adaptable virtual tools has the potential to give students with learning disabilities access to the regular science curriculum. This project builds immersive, multisensory virtual learning environments that address the foundations of physics instruction for students with learning disabilities.

by Chris Dede, Jim X. Chen, Kevin Ruess, Yonggao Yang, Xusheng Wang, et al.

(Funded by NSF; PI: Chris Dede; Co-PIs: Jim X. Chen, L. Fontana, D. Allison)

This research project is creating and evaluating multi-user virtual environments (MUVEs) that use digitized museum resources to enhance middle school students' motivation and learning about science and its impacts on society. MUVEs enable multiple simultaneous participants to access virtual architectures configured for learning, interact with digital artifacts, represent themselves through graphical "avatars," communicate both with other participants and with computer-based agents, and enact various types of collaborative activities. The project's educational environments are extending current MUVE capabilities in order to study the science learning potential of interactive virtual museum exhibits and participatory historical situations in science units using the NSF-funded Multimedia and Thinking Skills (MMTS) program, an inquiry-centered curriculum engine. George Mason University's (GMU) Computer Graphics and Virtual Reality Labs, the Division of Information Technology and Society in the Smithsonian's National Museum of American History (NMAH), and pilot teachers from Gunston Middle School in Arlington, Virginia, are co-designing these MUVEs and implementing them in a variety of middle school settings. In particular, this project is studying how the design characteristics of these learning experiences affect students' motivation and educational outcomes, as well as the extent to which digitized museum resources can aid pupils' performance on assessments related to national science standards. The research is also examining both the process needed to implement MMTS-based MUVEs successfully in typical classroom settings and ways to enable strong learning outcomes across a wide range of individual student characteristics.

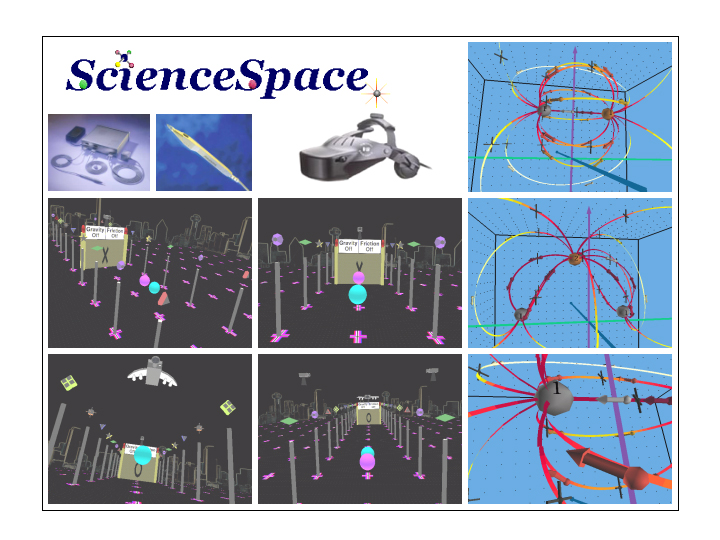

by Bowen Loftin, Chris Dede, Jim X. Chen, Xusheng Wang, et al.

(Funded by NSF)

The purpose of Project ScienceSpace is to explore the strengths and limits of virtual reality (sensory immersion, 3D representation) as a medium for science education. This project is a joint research venture among George Mason University, the University of Houston, and NASA's Johnson Space Center. Dr. R. Bowen Loftin of the University of Houston is the Principal Investigator, and Dr. Chris Dede from Harvard University is the Co-Principal Investigator. Dr. Jim Chen and Mr. Xusheng Wang have been funded by the project and are responsible for developing several of its major components.